Long-Horizon World Exploration

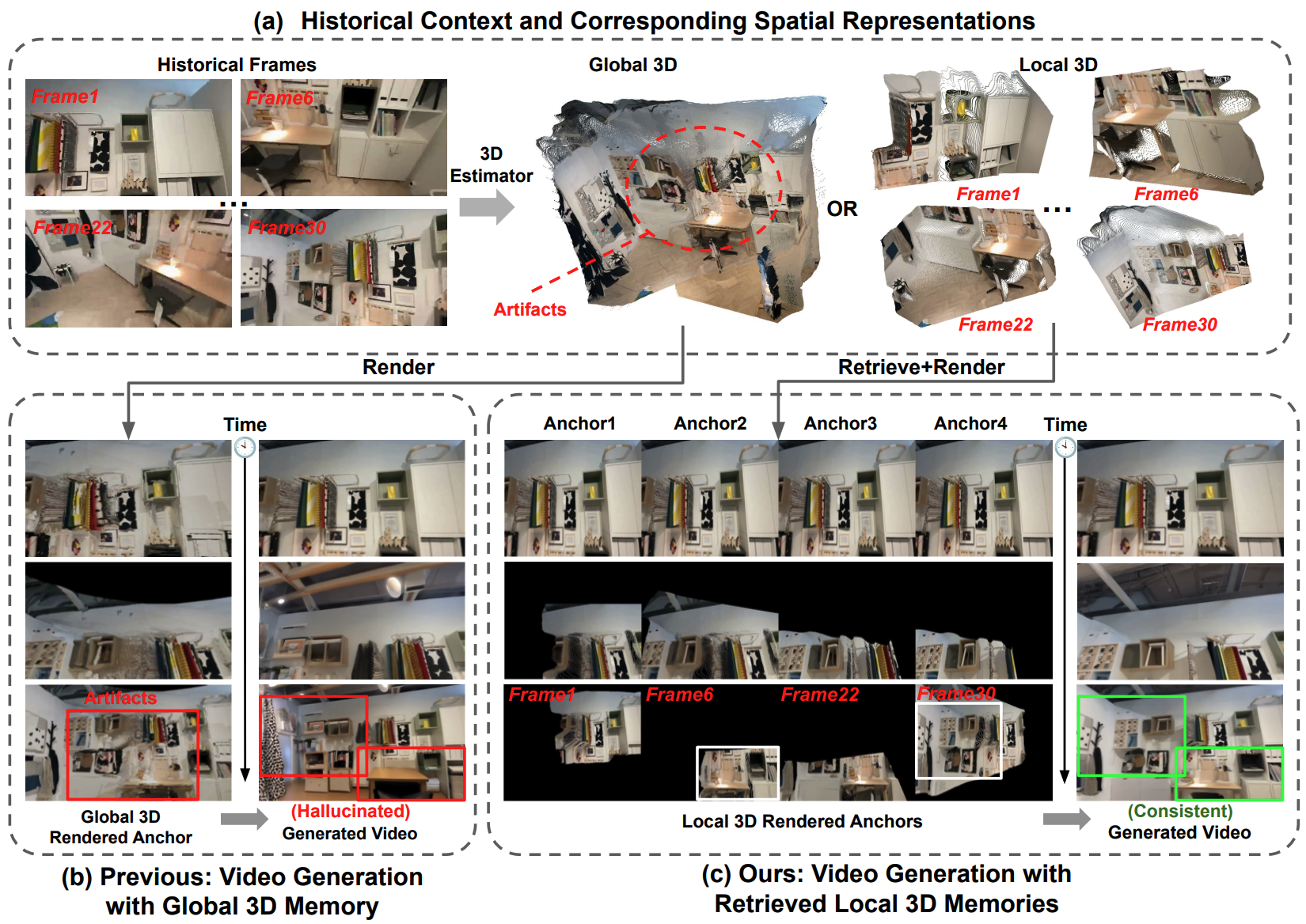

Maintaining spatial world consistency over long horizons remains a central challenge for camera-controllable video generation. Existing memory-based approaches often condition generation on globally reconstructed 3D scenes by rendering anchor videos from the reconstructed geometry in the history. However, reconstructing a global 3D scene from multiple views inevitably introduces cross-view misalignment, as pose and depth estimation errors cause the same surfaces to be reconstructed at slightly different 3D locations across views. When fused, these inconsistencies accumulate into noisy geometry that contaminates the conditioning signals and degrades generation quality.

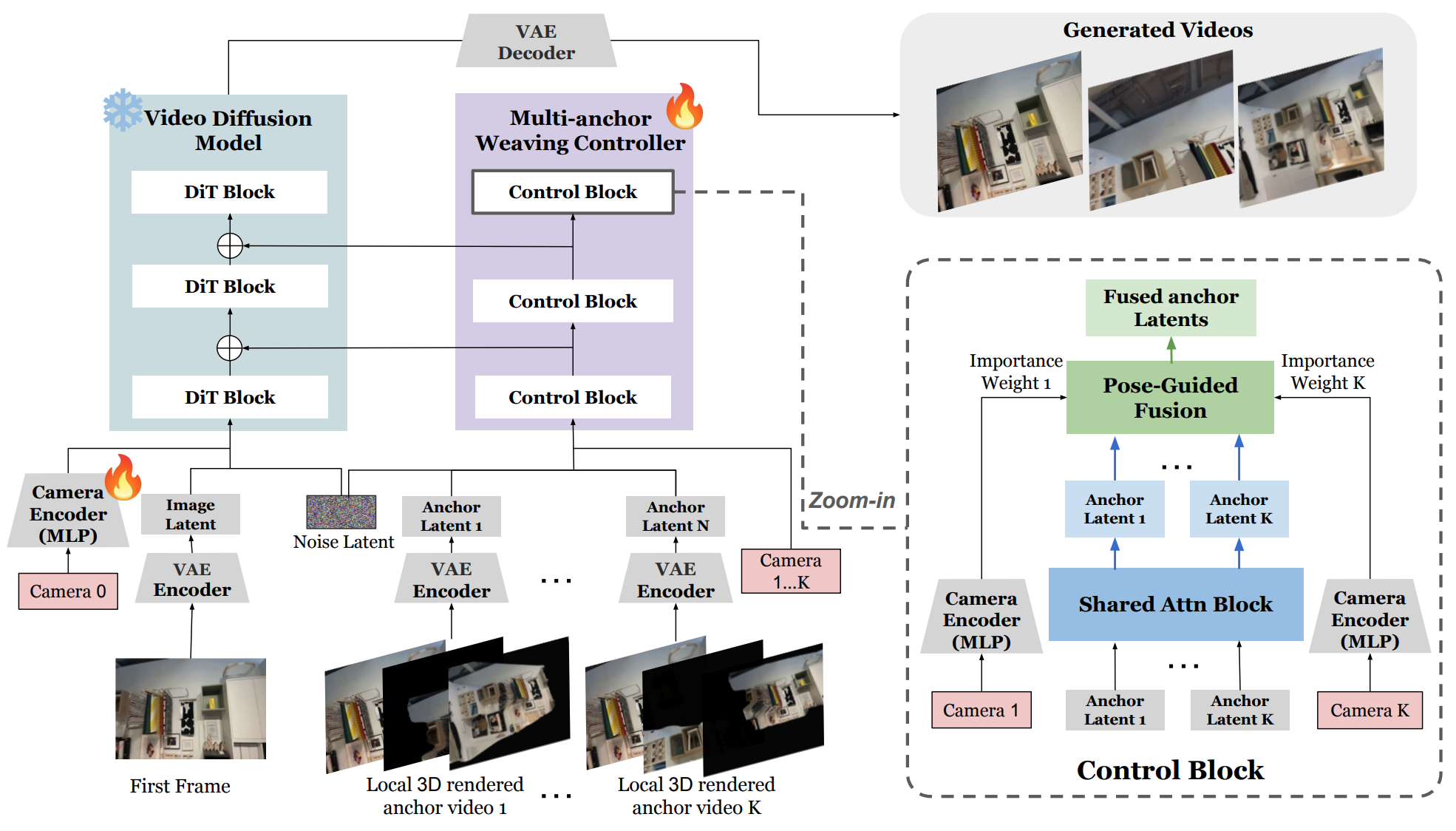

We introduce AnchorWeave, a memory-augmented video generation framework that replaces a single misaligned global memory with multiple clean local geometric memories and learns to reconcile their cross-view inconsistencies. To this end, AnchorWeave performs coverage-driven local memory retrieval aligned with the target trajectory and integrates the selected local memories through a multi-anchor weaving controller during generation. Extensive experiments demonstrate that AnchorWeave significantly improves long-term scene consistency while maintaining strong visual quality, with ablation and analysis studies further validating the effectiveness of local geometric conditioning, multi-anchor control, and coverage-driven retrieval.

Global 3D reconstruction accumulates cross-view misalignment, introducing artifacts in the reconstructed geometry ((a), middle), which propagate to the generated video as hallucinations ((b), red boxes). In contrast, per-frame local geometry inherently avoids cross-view misalignment and therefore remains clean ((a), right). Conditioning on multiple retrieved local geometric anchors, AnchorWeave maintains strong spatial consistency with the historical frames ((c), white and green boxes).

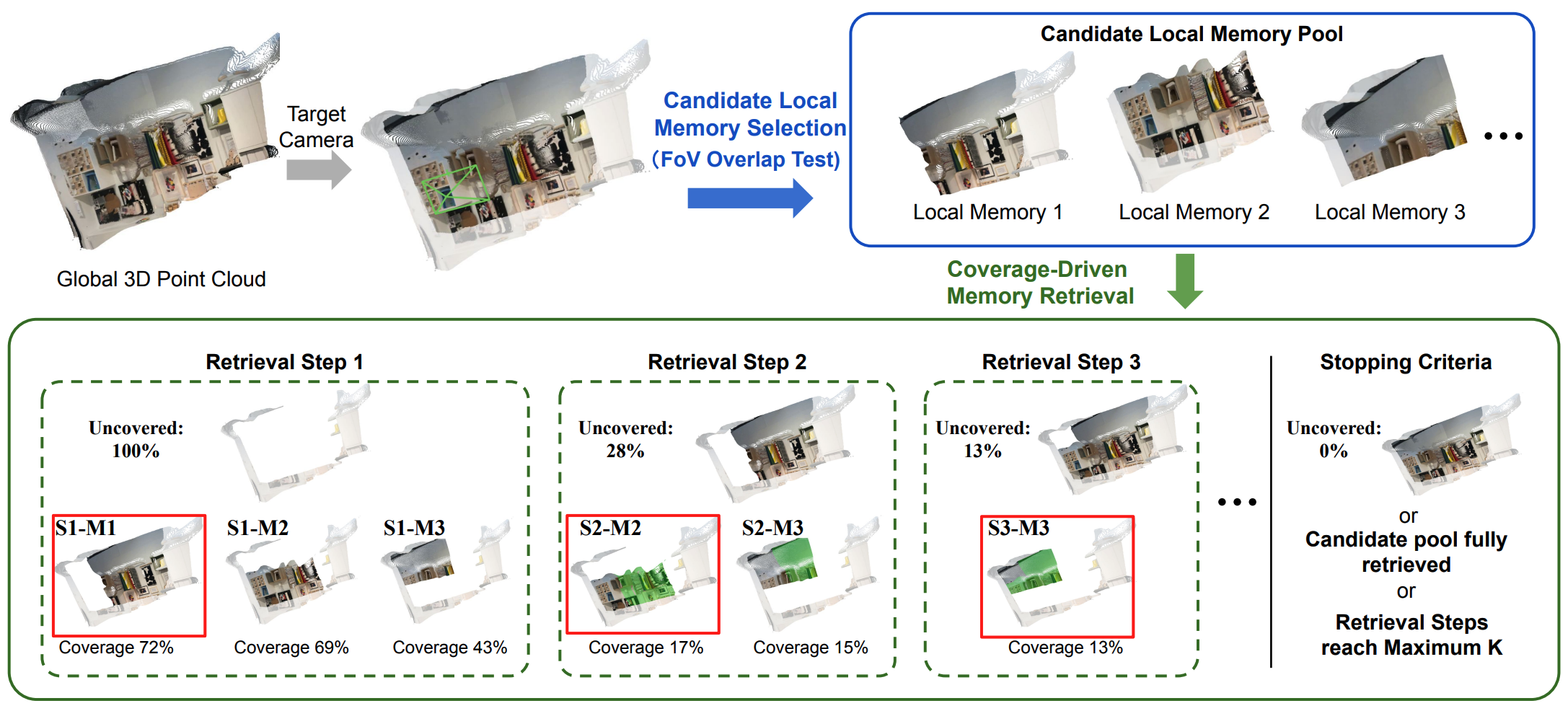

Given a target camera, we first select local memories whose camera FoVs partially overlap with the target camera view to form a candidate memory pool. At each retrieval step, we greedily select the memory that maximizes the newly covered visible area. Points invisible to the target camera are shown in gray. Si-Mj denotes memory j selected at retrieval step i, and the red box indicates the retrieved memory. In Si-Mj, regions already covered by previously retrieved memories are highlighted in green, and only newly covered regions retain their original RGB colors. No green regions appear in S1-Mj because nothing has been covered before the first step. Retrieval terminates when the uncovered region reaches 0%, the retrieval budget K is exhausted, or the remaining memory pool is empty. For clarity, coverage is computed here with a single frame; in practice it is aggregated over multiple frames per chunk.
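The retrieval loop described above can be sketched as a greedy maximum-coverage selection. This is a minimal illustration rather than the authors' implementation: the function name `greedy_coverage_retrieval`, the representation of visibility as sets of point IDs, and the single-frame coverage are all assumptions made for the example.

```python
from typing import Dict, List, Set


def greedy_coverage_retrieval(
    visible_by_memory: Dict[str, Set[int]],
    target_points: Set[int],
    budget: int,
) -> List[str]:
    """Greedily pick memories that add the most newly covered target-visible points.

    visible_by_memory: hypothetical map from memory ID to the point IDs it contains.
    target_points: point IDs visible from the target camera.
    budget: retrieval budget K.
    """
    # Candidate pool: keep only memories that overlap the target view,
    # restricted to the points the target camera can actually see.
    pool = {
        mem: pts & target_points
        for mem, pts in visible_by_memory.items()
        if pts & target_points
    }
    covered: Set[int] = set()
    selected: List[str] = []

    # Stop when everything is covered, the budget is spent, or the pool is empty.
    while pool and len(selected) < budget and covered != target_points:
        # Pick the memory with the largest newly covered area (ties: first in pool).
        best = max(pool, key=lambda mem: len(pool[mem] - covered))
        covered |= pool.pop(best)
        selected.append(best)
    return selected


memories = {
    "M1": {1, 2, 3},
    "M2": {3, 4},
    "M3": {4, 5, 6, 7},
    "M4": {10, 11},  # no overlap with the target view, so never a candidate
}
print(greedy_coverage_retrieval(memories, {1, 2, 3, 4, 5, 6}, budget=3))
```

In this toy case M2 is never retrieved: after M1 is chosen, M3 adds three new points while M2 adds only one, and coverage is complete after the second step.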

Anchors are encoded and jointly processed by a shared attention block, followed by camera-pose-guided fusion to produce a unified control signal that is injected into the backbone model. Cameras 1 to K denote the retrieved-to-target camera poses for anchor videos 1 to K: each is the relative pose between the camera associated with a retrieved local point cloud and the target camera, and thus measures their viewpoint proximity. Camera 0 is the relative target camera trajectory.
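To make the retrieved-to-target pose concrete, the sketch below computes a relative pose from two camera-to-world matrices. The camera-to-world convention, the 4x4 row-major list representation, and the helper names (`invert_rigid`, `relative_pose`, `translation_distance`) are assumptions for illustration; the source does not specify how poses are parameterized or how the fusion consumes them.

```python
import math
from typing import List

Matrix = List[List[float]]  # 4x4 row-major rigid transform (assumed camera-to-world)


def invert_rigid(T: Matrix) -> Matrix:
    """Invert a rigid transform [R | t] as [R^T | -R^T t]."""
    R_t = [[T[j][i] for j in range(3)] for i in range(3)]  # transposed rotation
    t = [T[i][3] for i in range(3)]
    t_inv = [-sum(R_t[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [R_t[i] + [t_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    """Plain 4x4 matrix product."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def relative_pose(memory_c2w: Matrix, target_c2w: Matrix) -> Matrix:
    """Pose of the retrieved memory camera expressed in the target camera frame."""
    return matmul(invert_rigid(target_c2w), memory_c2w)


def translation_distance(T: Matrix) -> float:
    """One simple proximity cue: the length of the relative translation."""
    return math.sqrt(sum(T[i][3] ** 2 for i in range(3)))


def translated(x: float, y: float, z: float) -> Matrix:
    """Helper: identity rotation with the given camera position."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


rel = relative_pose(translated(3.0, 0.0, 0.0), translated(1.0, 0.0, 0.0))
print(rel[0][3], translation_distance(rel))
```

A memory whose relative pose is close to the identity views the scene from nearly the same place as the target, which is the intuition behind using these poses to guide fusion.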

@article{wang2025anchorweave,
  title={AnchorWeave: World-Consistent Video Generation with Retrieved Local Spatial Memories},
  author={Wang, Zun and Lin, Han and Yoon, Jaehong and Cho, Jaemin and Zhang, Yue and Bansal, Mohit},
  journal={arXiv preprint},
  year={2025}
}